Artificial Intelligence (AI) has rapidly evolved from a niche research field into the driving force behind today’s technological revolution. From large language models (LLMs) powering generative AI tools to recommendation engines, self-driving cars, and predictive analytics, AI workloads are everywhere. These workloads are hungry for compute power — requiring massive clusters of GPUs and TPUs to train and run models.

Artificial Intelligence (AI) has rapidly evolved from a niche research field into the driving force behind today’s technological revolution. From large language models (LLMs) powering generative AI tools to recommendation engines, self-driving cars, and predictive analytics, AI workloads are everywhere. These workloads are hungry for compute power — requiring massive clusters of GPUs and TPUs to train and run models.

But while GPUs tend to capture the headlines, networking is the invisible backbone of AI performance. Without the right interconnect fabric, even the most powerful GPUs sit idle, waiting for data to arrive or synchronizations to complete.

In this article, we’ll explore why networking matters so much in the AI era, and compare three dominant technologies shaping AI clusters today: InfiniBand, RoCE (RDMA over Converged Ethernet), and Cloud Networking.

Why Networking is Critical for AI Workloads

AI training is inherently a distributed computing problem. Training a model like GPT or BERT involves splitting computation across thousands of accelerators. After each step, GPUs must exchange intermediate results — gradients, parameters, activations — with one another. This pattern, known as all-reduce, is highly network intensive.

Key networking requirements for AI include:

- Ultra-low latency → Every microsecond matters. Latency directly impacts synchronization steps like gradient aggregation.

- High bandwidth → With datasets scaling into petabytes, AI clusters need throughput in the hundreds of gigabits per second (Gbps).

- Scalability → Clusters must scale to thousands of GPUs without bottlenecks.

- Lossless communication → Packet drops lead to retransmissions, which kill efficiency in tightly coupled workloads.

- Ecosystem integration → Networking must integrate seamlessly with AI libraries (NCCL, MPI, PyTorch DDP) and orchestration systems (Kubernetes, Slurm).

Without these characteristics, the cost of training skyrockets — as GPUs waste time waiting for the network.

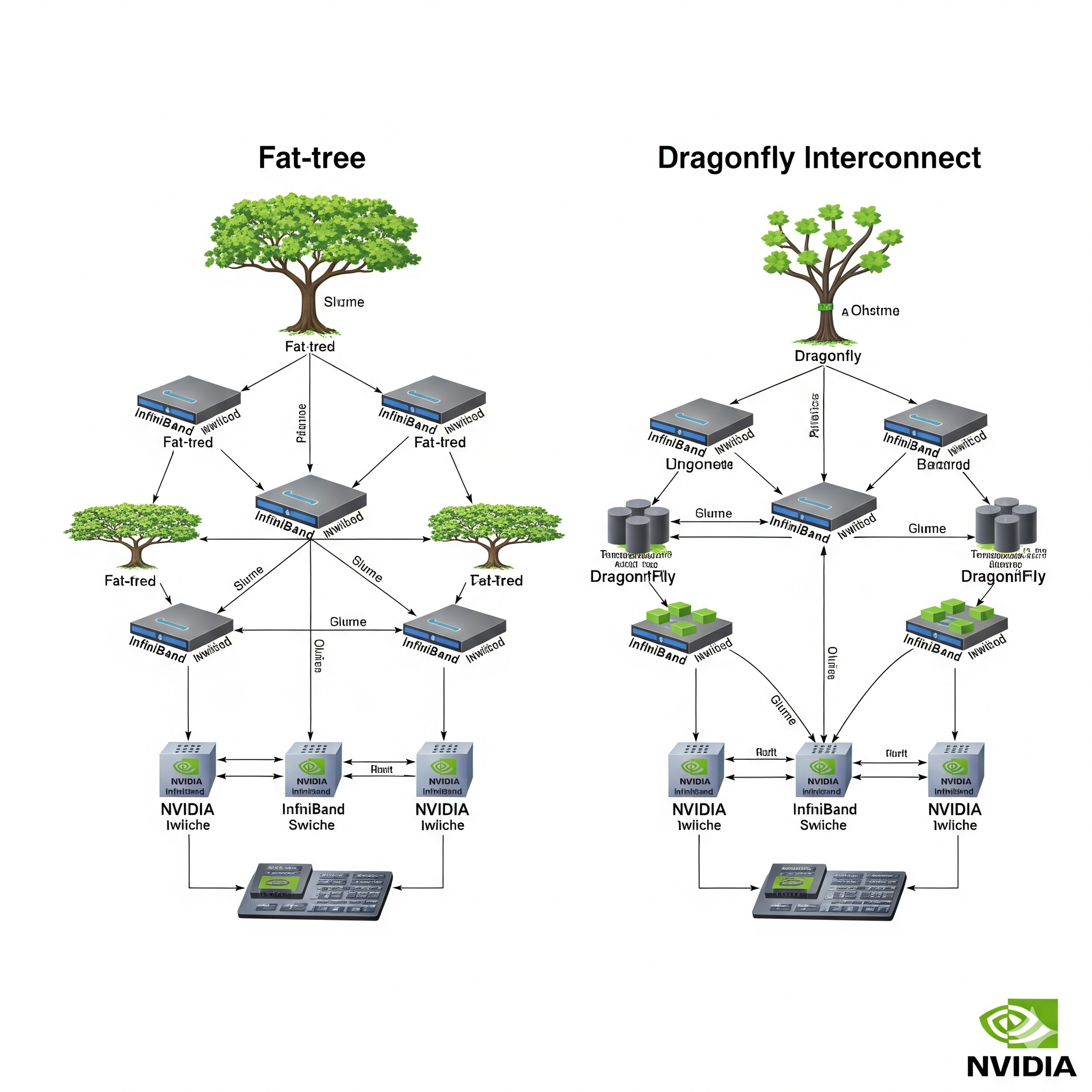

InfiniBand: The Gold Standard for AI Supercomputing

InfiniBand (IB) was born in the high-performance computing (HPC) world and has long been the interconnect of choice for supercomputers. With NVIDIA’s acquisition of Mellanox, InfiniBand is now deeply tied to the AI ecosystem.

Strengths of InfiniBand

- Unmatched latency: Typically <1µs — critical for collective operations like all-reduce.

- Extreme bandwidth: Roadmap to 800 Gbps and beyond.

- In-network compute: Features like SHARP offload collective operations to switches, reducing communication overhead.

- Mature ecosystem: Widely supported in MPI, NCCL, and NVIDIA’s AI software stack.

- Proven track record: Powers top AI clusters like NVIDIA DGX SuperPOD, Meta’s Research SuperCluster, and many systems on the TOP500 supercomputing list.

Limitations of InfiniBand

- High cost: Specialized NICs and switches are expensive.

- Vendor lock-in: Dominated by NVIDIA/Mellanox.

- Niche expertise: Operating and scaling IB requires HPC-style skill sets.

- Limited enterprise adoption: Outside of AI/HPC, IB is rare, making integration harder for general-purpose data centers.

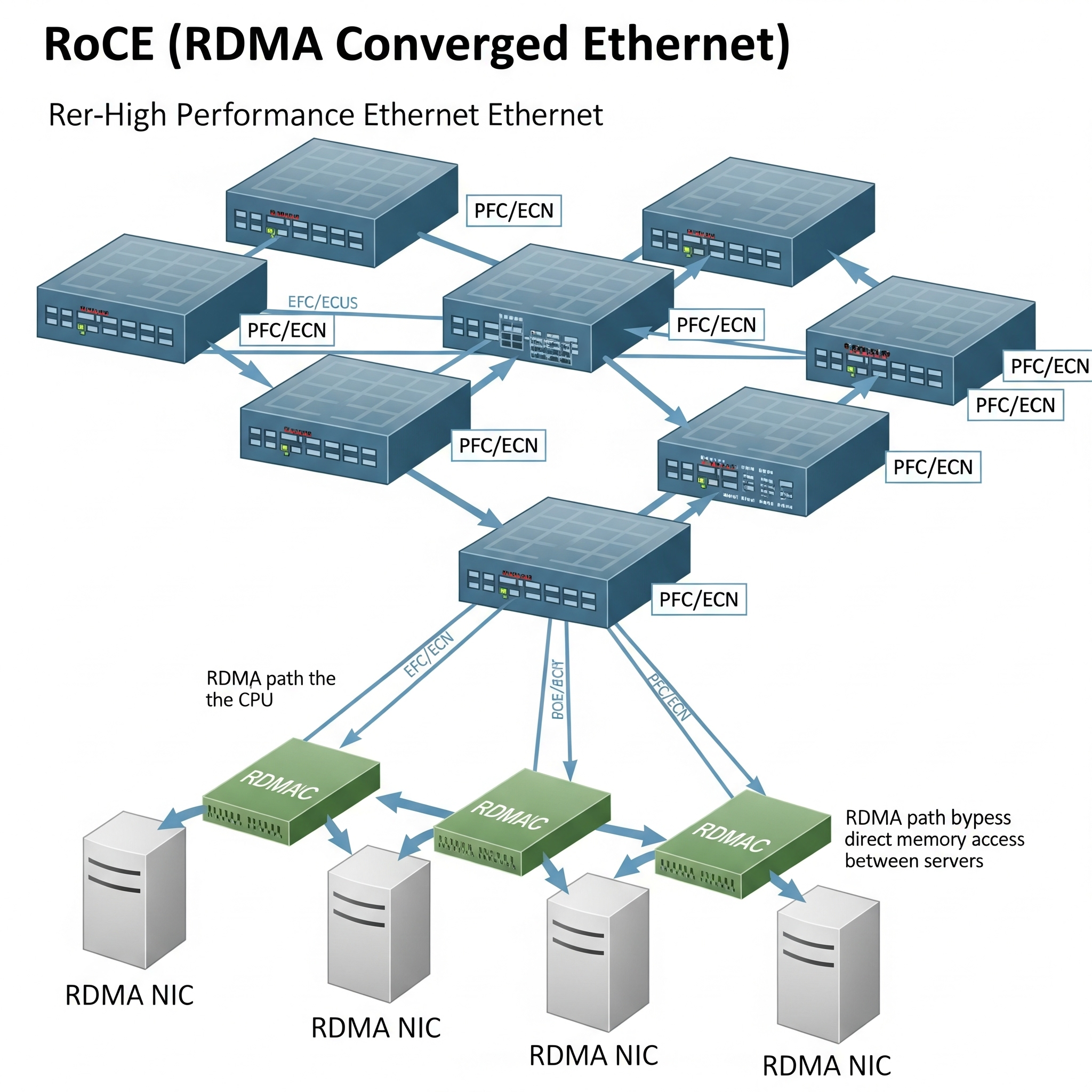

RoCE: Ethernet’s Answer to InfiniBand

RDMA (Remote Direct Memory Access) is the technology that enables one computer to directly access the memory of another without CPU involvement, dramatically reducing latency. RoCE (RDMA over Converged Ethernet) brings this capability to Ethernet — the most widely deployed networking technology in the world.

Strengths of RoCE

- Runs on Ethernet: Leverages existing switching infrastructure.

- Lower cost: Ethernet switches are cheaper than IB fabric.

- Performance: With tuning, RoCE can approach IB-level latency (~1–2µs) and throughput.

- Ecosystem integration: Supported in NCCL, MPI, Kubernetes, and cloud-native stacks.

- Flexibility: Coexists with standard IP traffic on the same Ethernet fabric.

Limitations of RoCE

- Complex tuning required: For lossless operation, features like PFC (Priority Flow Control) and ECN (Explicit Congestion Notification) must be configured carefully.

- Debugging challenges: Performance drops can be subtle and hard to diagnose.

- Vendor dependence: NICs from Mellanox and Broadcom dominate the market.

- Less HPC maturity: While good, RoCE lacks some advanced HPC features (e.g., SHARP).

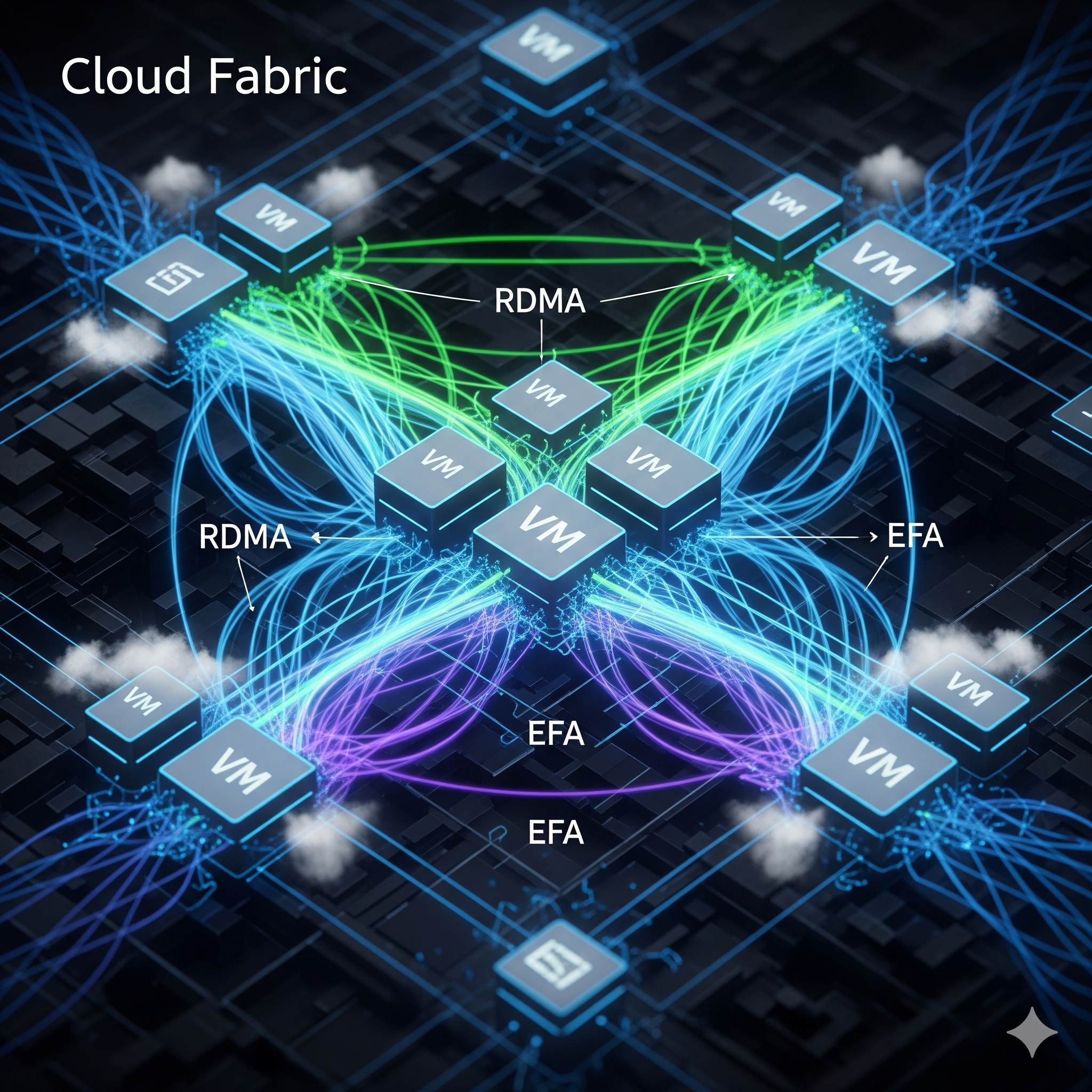

Cloud Networking: Elasticity Over Raw Performance

Not every organization can afford to build or maintain large on-prem clusters. This is where cloud networking comes into play. Hyperscale providers like AWS, Azure, and Google Cloud offer high-speed, RDMA-capable interconnects in their AI/ML instances.

Strengths of Cloud Networking

- Elastic scaling: Spin up thousands of GPUs on-demand.

- Zero CapEx: No need to buy or maintain networking gear.

- Ecosystem integration: Tightly coupled with managed AI services (SageMaker, Vertex AI).

- High speeds: AWS’s Elastic Fabric Adapter (EFA) and Azure’s Infiniband-backed VMs support 200–400 Gbps+.

Limitations of Cloud Networking

- Higher latency: Virtualization adds overhead (10–50µs).

- Bandwidth caps: Not all instance types get top speeds.

- Opaque fabric: Users have little visibility into the underlying network.

- Cost at scale: Renting thousands of GPUs quickly becomes more expensive than owning.

Comparison: InfiniBand vs RoCE vs Cloud

| Feature | InfiniBand | RoCE | Cloud Networking |

|---|---|---|---|

| Latency | <1 µs | 1–2 µs (tuned) | 10–50 µs |

| Bandwidth | 200–800 Gbps | 100–400 Gbps | 100–400 Gbps |

| Scalability | Excellent (HPC) | Very Good (tuned) | Elastic, but costly at scale |

| Cost | High (CapEx) | Medium (CapEx/Opex) | Variable (Opex, can spike) |

| Maturity | HPC, AI-focused | Enterprise + AI hybrid | Cloud-native AI workloads |

| Best Fit | Hyperscale AI R&D | Enterprise AI clusters | Startups, burst workloads |

Use Cases and Deployment Scenarios

InfiniBand

- National supercomputing centers

- Hyperscalers (Microsoft, Meta, NVIDIA)

- AI research labs training trillion-parameter models

RoCE

- Enterprises running private AI clusters

- Hybrid environments (on-prem + cloud burst)

- Telco and edge AI use cases

Cloud Networking

- Startups testing AI models without CapEx

- Short-lived training workloads

- Companies prioritizing agility over cost efficiency

Future Outlook: Convergence on the Horizon

Several trends are shaping the future of AI interconnects:

- Ethernet catching up → With 800G Ethernet on the horizon, the gap between InfiniBand and RoCE may narrow further.

- Cloud fabrics evolving → AWS EFA v2 and Azure’s IB-backed ND-series VMs are pushing cloud performance closer to on-prem.

- AI-driven congestion control → Networks that self-tune using ML are emerging, reducing the pain of manual RoCE configuration.

- Heterogeneous fabrics → Hybrid strategies (cloud for elasticity, on-prem IB/RoCE for steady workloads) will dominate.

- Standardization efforts → Open fabrics initiatives could reduce vendor lock-in and democratize AI networking.

Conclusion

In the age of AI workloads, networking is no longer an afterthought — it is a strategic differentiator.

- InfiniBand remains the gold standard for performance-hungry AI research.

- RoCE offers a pragmatic balance of cost and capability, riding on Ethernet’s ubiquity.

- Cloud networking provides unmatched flexibility, albeit at the cost of absolute performance.

The right choice depends on scale, budget, and goals. For most enterprises, RoCE and cloud will form the backbone, while hyperscalers and elite research labs continue to rely on InfiniBand.

As AI models grow larger and more complex, one thing is clear: the network is as important as the GPU in determining who leads the AI race.

Abbreviations

- AI – Artificial Intelligence

- LLM – Large Language Model

- GPU – Graphics Processing Unit

- TPU – Tensor Processing Unit

- NIC – Network Interface Card

- IB – InfiniBand

- RoCE – RDMA over Converged Ethernet

- RDMA – Remote Direct Memory Access

- HPC – High-Performance Computing

- NCCL – NVIDIA Collective Communications Library

- MPI – Message Passing Interface

- DDP – Distributed Data Parallel

- PFC – Priority Flow Control

- ECN – Explicit Congestion Notification

- CapEx – Capital Expenditure

- OpEx – Operational Expenditure

References

NVIDIA – InfiniBand Networking for AI and HPC

https://www.nvidia.com/en-us/networking/technologies/infiniband/NVIDIA – What is RDMA over Converged Ethernet (RoCE)?

https://www.nvidia.com/en-us/networking/ethernet/roce/AWS – Elastic Fabric Adapter (EFA)

https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/efa.htmlMicrosoft Azure – ND A100 v4-series VMs with InfiniBand

https://learn.microsoft.com/en-us/azure/virtual-machines/ndv4-seriesGoogle Cloud – A3 Mega VMs with NVIDIA H100 GPUs

https://cloud.google.com/blog/products/compute/a3-megagpusMellanox (now NVIDIA Networking) – SHARP: Scalable Hierarchical Aggregation and Reduction Protocol

https://www.mellanox.com/related-docs/prod_acceleration_software/SHARP-Overview.pdfTOP500 – Supercomputer Rankings and Interconnect Trends

https://www.top500.org/statistics/interconnects/